I am a content creator. As such, I create a lot of data files in the form of text, images, programs and, well, data. Since so much of this content has my time and sweat invested in it, I care a lot about it. And it’s distributed over four different computers.

Driven by my paranoia about losing any of my precious data, I’ve been looking for a unified solution to manage data across multiple computers, with both local and cloud backup, that will handle at least 3 TB and be expandable. I think I’ve found it.

I wanted a process that would allow me to

- synchronize 100 GB to 1,000 GB of files seamlessly across my computers

- share 100 GB of files with anyone

- automatically back up as much as 3 TB of files locally over wifi

- automatically back up as much as 3 TB of files to the cloud

- access all the backed-up files both locally on my network and through the cloud

If all I wanted to do was back up 1 TB of data on a few computers, I would just use Dropbox. I could install it on each computer, and as long as each computer had a 1 TB hard drive, I would be able to synchronize the files among all the computers and have them automatically backed up to the cloud.

Indeed, this is exactly what I used to do when my total data set was about 500 GB. I loved Dropbox and I still love Dropbox, but I can’t put it on an external hard drive or a NAS, and I don’t want to pay $799 a year for more than 1 TB of Dropbox space.

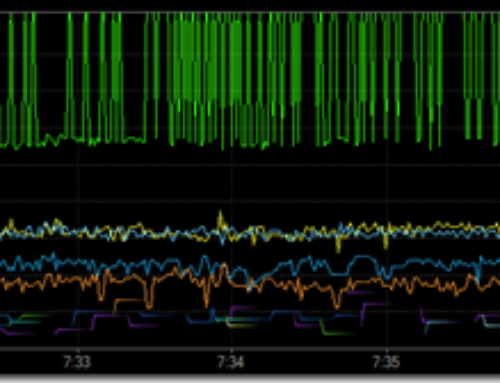

But now I have about 2 TB of data across all four of my computers, and it’s growing exponentially, just like hard drive capacity in the graph above. I can’t practically put all my data in Dropbox and share it among all my computers.

A three-tiered approach to backup is often recommended: on the local computer, on the local network, and remotely, offsite in the cloud. This gives three copies of the data, on two different types of media, with one copy offsite. Here is how I implemented this strategy.
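The three-tier idea, often called the 3-2-1 rule, is simple enough to express as a check. This is only an illustrative sketch: `Copy` and `meets_three_tier_rule` are hypothetical names invented for this example, and the medium labels are free-form strings describing the setup in this post.

```python
from dataclasses import dataclass

@dataclass
class Copy:
    location: str   # where this copy lives, e.g. "laptop" or "NAS"
    medium: str     # kind of storage, e.g. "internal disk", "cloud"
    offsite: bool   # True if the copy is stored outside the house

def meets_three_tier_rule(copies: list[Copy]) -> bool:
    """At least three copies, on at least two media, with at least one offsite."""
    media = {c.medium for c in copies}
    return len(copies) >= 3 and len(media) >= 2 and any(c.offsite for c in copies)

# Hypothetical description of a setup like the one in this post.
plan = [
    Copy("laptop", "internal disk", offsite=False),
    Copy("NAS", "network disk", offsite=False),
    Copy("CrashPlan", "cloud", offsite=True),
]
print(meets_three_tier_rule(plan))  # True
```

Dropping the cloud copy (`plan[:2]`) makes the check fail, which is exactly the gap the third tier is meant to close.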

The first line of defense is still Dropbox.

I pay $99/year for 1 TB of Dropbox storage. Dropbox acts as a shared folder on every computer it is installed on. Anything in that folder is automatically synchronized to the Dropbox folders on all computers with the same account, and to an identical folder in the cloud. This all happens automatically over the net.

Since the folder is also on the local computer, everything in it is accessible even when the computer is offline. Dropbox seamlessly re-syncs the folder the next time the computer goes online. There are two special kinds of folder within the Dropbox folder.

First, any folder can be set up to be shared with someone outside the account. This creates a common, automatically synchronized folder on the computer of everyone it is shared with. This is great for collaborating with others.

The second important special folder in Dropbox is the Public folder. Any file placed in this folder can be downloaded by anyone. Each file in the Public folder is synced to the cloud.

I can get a public link to the cloud copy of the file. I can give this link out, and anyone who clicks on it will automatically download the file. This is great for giving anyone access to a specific file. When there are multiple files to distribute, I put them in a zip file.
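Bundling several files into one zip is easy to script. Here is a minimal sketch using Python’s standard `zipfile` module; `bundle_for_sharing` is a hypothetical helper, not anything provided by Dropbox.

```python
import zipfile
from pathlib import Path

def bundle_for_sharing(files: list[Path], zip_path: Path) -> Path:
    """Pack several files into one zip archive so a single public link can share them all."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            # Store only the file name, not the local folder layout.
            # Two files with the same name would collide inside the archive.
            zf.write(f, arcname=f.name)
    return zip_path
```

For example, `bundle_for_sharing([Path("slides.pdf"), Path("notes.txt")], Path.home() / "Dropbox" / "Public" / "handout.zip")` would drop the archive straight into the Public folder, assuming the Dropbox folder lives at `~/Dropbox`; the file names are placeholders.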

Any file I want to access on multiple computers, I place in the Dropbox folder. This way, it doesn’t matter which computer I am on: I can access the same file, and as long as I am connected to the net, it is automatically synced to the other computers. When I edit or change the file, the edits appear across all the computers in almost real time.

As long as I have the software application on each computer, it doesn’t matter which computer I do my work on. They are all equivalent.

But this only works for about 200 GB of data, the size of my smallest hard drive.

The second line of defense is a network-attached storage (NAS) hard drive.

I have a Western Digital 3 TB hard drive connected by Ethernet cable to my router, so it can be accessed over my home wifi network from any computer in the house. I use this hard drive as my local automated backup.

The hard drive is also rated as a “cloud storage” device. This means that, in principle, it has an IP address and can be accessed remotely from any web browser. I find the interface awkward and not very fast. More importantly, since the drive sits in my house, using it as cloud storage defeats the purpose of having an offsite copy.

The best automated backup tool I have found is actually an automated synchronization tool, AllwaySync.

I set up a synchronization job on each computer to automatically sync the folder that contains all the data I care about. This is often the My Documents folder, but it can be wherever I store my data. This folder is synced to an identical folder on the NAS hard drive. Every day, the folder on the computer is automatically synchronized to the NAS folder. I usually schedule this for 2 am.
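AllwaySync is configured through its own interface, so its actual settings aren’t shown here. As a rough illustration of what a one-way sync job does, here is a minimal Python sketch. The `mirror` function is hypothetical: it compares only file size and modification time, and it deliberately never deletes anything on the NAS side, which may differ from how AllwaySync handles deletions.

```python
import shutil
from pathlib import Path

def mirror(src: Path, dst: Path) -> list[Path]:
    """One-way sync: copy files that are new or changed in src into dst.

    A file counts as changed if its size differs or the source copy is newer.
    Files deleted from src are NOT deleted from dst (safer for a backup).
    Returns the relative paths that were copied.
    """
    copied = []
    for s in sorted(src.rglob("*")):
        if not s.is_file():
            continue
        rel = s.relative_to(src)
        d = dst / rel
        if d.exists():
            ss, ds = s.stat(), d.stat()
            if ss.st_size == ds.st_size and ss.st_mtime <= ds.st_mtime:
                continue  # unchanged since the last run
        d.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(s, d)  # copy2 also copies the modification time
        copied.append(rel)
    return copied
```

On Linux or macOS, a small script that calls `mirror` could run at 2 am every day from a crontab entry such as `0 2 * * * python3 /path/to/mirror_job.py`, where the script name and path are placeholders; on Windows, Task Scheduler fills the same role.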

Even better, all the files on the NAS are in their normal, readable format. I can access them effortlessly from any computer on the network, in the same organization as on the computer where they were created.

This provides both an automatic backup of any data I want to secure and local access to it. This is the second leg of the three-legged stool of robust backup.

My NAS currently has 3 TB of storage. This is plenty to store all the data from all the computers I have now. I suspect it will last me another year.
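Whether 3 TB really lasts another year depends on how fast the data grows. If it keeps growing exponentially, the time to fill the drive comes from solving current × (1 + g)^t = capacity for t. The sketch below does that; `years_until_full` is a hypothetical helper, the 2 TB and 3 TB figures come from this post, and the growth rates are illustrative guesses, not measurements.

```python
import math

def years_until_full(current_tb: float, capacity_tb: float, annual_growth: float) -> float:
    """Years until data growing at a fixed annual rate fills the drive.

    Solves current_tb * (1 + annual_growth) ** t = capacity_tb for t.
    """
    return math.log(capacity_tb / current_tb) / math.log(1 + annual_growth)

print(round(years_until_full(2, 3, 0.5), 2))  # 1.0
print(round(years_until_full(2, 3, 1.0), 2))  # 0.58
```

At 50% growth per year, 2 TB reaches 3 TB in exactly one year; if the data doubled every year, the NAS would fill in about seven months.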

While this is an excellent way of backing up my files and having them all available from any device, it is still local storage. If the house burns down, or the hard drive crashes, I’m screwed. I still want offsite storage.

The third line of defense is offsite storage and backup.

I needed an offsite storage solution that allowed for more than 3 TB of storage and could be set up for automatic backup. I reviewed all the options and decided on CrashPlan.

For $59/year, I get unlimited storage and automatic cloud backup of one computer, including any internal or external hard drive connected to that computer.

I can set up a schedule, and the hard drive’s files will be backed up to CrashPlan’s cloud server. Using their simple application, I can access the files on their server and download any of them to any device.

While the bandwidth is not huge, I can often get upload speeds of 5 to 7 Mbps, limited by my external WAN connection. 100 GB of data is 800 Gb (gigabits). Even at a generous 8 Mbps, uploading it takes 800 Gb / 8 Mbps = 100,000 seconds, a little over a day. 1 TB takes ten times as long: about 12 days at 8 Mbps, and closer to 19 days at 5 Mbps. This means the initial backup will take a long time. But it will get backed up, and it will be automatic.
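The upload-time arithmetic above generalizes easily. A small sketch; `upload_days` is a hypothetical helper, and it assumes decimal units (1 GB = 8,000 megabits) and a steady upload rate with no protocol overhead, compression, or deduplication, so real times will differ.

```python
def upload_days(data_gb: float, upload_mbps: float) -> float:
    """Days to upload data_gb gigabytes at a sustained upload_mbps megabits per second."""
    megabits = data_gb * 8_000          # 1 GB = 8 Gb = 8,000 Mb (decimal units)
    seconds = megabits / upload_mbps
    return seconds / 86_400             # seconds per day

for gb in (100, 1_000, 3_000):
    for mbps in (5, 8):
        print(f"{gb:>5} GB at {mbps} Mbps: {upload_days(gb, mbps):5.1f} days")
```

At 8 Mbps, 1 TB comes out to about 11.6 days, and 3 TB to about 35 days.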

Once the files are backed up, only incremental changes are uploaded. This is also why it is good to have local access to all the files rather than keeping them only in the cloud: accessing and downloading large files from the cloud would take far too long compared to local storage.
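The incremental idea can be sketched in a few lines: fingerprint every file, compare with the fingerprints saved from the last run, and upload only the files that differ. This is a generic illustration, not a description of how CrashPlan itself works; `changed_since` and the manifest format (relative path mapped to a SHA-256 hex digest) are hypothetical.

```python
import hashlib
from pathlib import Path

def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, read in 1 MiB chunks to keep memory use flat."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()

def changed_since(root: Path, manifest: dict[str, str]) -> tuple[list[str], dict[str, str]]:
    """Compare every file under root with the previous run's manifest.

    Returns (files that are new or whose contents changed, manifest to save
    for the next run). Only the first list would need to be uploaded.
    """
    new_manifest: dict[str, str] = {}
    changed: list[str] = []
    for p in sorted(root.rglob("*")):
        if p.is_file():
            rel = p.relative_to(root).as_posix()
            digest = file_digest(p)
            new_manifest[rel] = digest
            if manifest.get(rel) != digest:
                changed.append(rel)
    return changed, new_manifest
```

Hashing every file on every run is slow for terabytes of data, which is why real tools often check size and modification time first; this sketch also ignores deleted files.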

This is the same idea as the memory hierarchy in a computer. The small caches on the processor die can be accessed really fast, the much larger DRAM main memory is slower but still pretty fast, and then there is the hard drive, which is by far the largest and slowest.

The one downside of this approach is that I cannot back up a NAS with CrashPlan. The hard drive has to be attached to the computer subscribed to the account.

To fix this problem, I went out and bought another Western Digital hard drive, a 4 TB model, attached by USB to my one desktop computer. I use AllwaySync to automatically synchronize all my data to the 4 TB drive and use CrashPlan to back up this drive.

It’s a little redundant, having two large hard drives in the house with the same data, but the 4 TB drive was only $130, and it protects against a crash of the NAS drive. My NAS is about 4 years old now and sits in the same room as the cat litter, so I do worry about the dust and cat hair it is exposed to, and I would not be surprised by a drive crash in the not-too-distant future.

I set up the synchronization so that every day all the data from all my computers is backed up to the NAS, the NAS is backed up to the 4 TB drive, and the 4 TB drive is backed up to the cloud.

The approach I adopted is not unique; at least one other blogger uses a very similar setup, shown below.

Once set up, all the backups are handled seamlessly and automatically as long as my devices are connected to the network. And I sleep easy at night.